The way JavaScript handles Unicode is… surprising, to say the least. This write-up explains the pain points associated with Unicode in JavaScript, provides solutions for common problems, and explains how the ECMAScript 6 standard improves the situation.

Unicode basics

Before we take a closer look at JavaScript, let’s make sure we’re all on the same page when it comes to Unicode.

It’s easiest to think of Unicode as a database that maps any symbol you can think of to a number called its code point, and to a unique name. That way, it’s easy to refer to specific symbols without actually using the symbol itself. Examples:

- A is U+0041 LATIN CAPITAL LETTER A.

- a is U+0061 LATIN SMALL LETTER A.

- © is U+00A9 COPYRIGHT SIGN.

- ☃ is U+2603 SNOWMAN.

- 💩 is U+1F4A9 PILE OF POO.

Code points are usually formatted as hexadecimal numbers, zero-padded up to at least four digits, with a U+ prefix.

The possible code point values range from U+0000 to U+10FFFF. That’s over 1.1 million possible symbols. To keep things organised, Unicode divides this range of code points into 17 planes that consist of about 65 thousand code points each.

The first plane (U+0000 → U+FFFF) is called the Basic Multilingual Plane or BMP, and it’s probably the most important one, as it contains all the most commonly used symbols. Most of the time you don’t need any code points outside of the BMP for text documents in English. Just like any other Unicode plane, it groups about 65 thousand symbols.

That leaves us about 1 million other code points (U+010000 → U+10FFFF) that live outside the BMP. The planes these code points belong to are called supplementary planes, or astral planes.

Astral code points are pretty easy to recognize: if you need more than 4 hexadecimal digits to represent the code point, it’s an astral code point.
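To make the notation and the plane arithmetic concrete, here is a minimal sketch. The helper names (formatCodePoint, getPlane, isAstral) are my own and not part of any standard API; the only assumption is that each plane spans 0x10000 code points, as described above.

```javascript
// Hypothetical helpers, for illustration only.

// Format a code point as U+XXXX: uppercase hexadecimal, zero-padded to at
// least four digits.
function formatCodePoint(codePoint) {
  if (!Number.isInteger(codePoint) || codePoint < 0 || codePoint > 0x10FFFF) {
    throw new RangeError('Not a valid Unicode code point: ' + codePoint);
  }
  return 'U+' + codePoint.toString(16).toUpperCase().padStart(4, '0');
}

// Each plane spans 0x10000 (65,536) code points, so the plane number is the
// code point divided by 0x10000, rounded down. Plane 0 is the BMP.
function getPlane(codePoint) {
  return Math.floor(codePoint / 0x10000);
}

// Anything above U+FFFF needs more than four hex digits and is astral.
function isAstral(codePoint) {
  return codePoint > 0xFFFF;
}

console.log(formatCodePoint(0x41), getPlane(0x41), isAstral(0x41));
console.log(formatCodePoint(0x1F4A9), getPlane(0x1F4A9), isAstral(0x1F4A9));
```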

Now that we have a basic understanding of Unicode, let’s see how it applies to JavaScript strings.

Escape sequences

You may have seen things like this before:

>> '\x41\x42\x43'

'ABC'

>> '\x61\x62\x63'

'abc'

These are called hexadecimal escape sequences. They consist of two hexadecimal digits that refer to the matching code point. For example, \x41 represents U+0041 LATIN CAPITAL LETTER A. These escape sequences can be used for code points in the range from U+0000 to U+00FF.

Also common is the following type of escape:

>> '\u0041\u0042\u0043'

'ABC'

>> 'I \u2661 JavaScript!'

'I ♡ JavaScript!'

These are called Unicode escape sequences. They consist of exactly 4 hexadecimal digits that represent a code point. For example, \u2661 represents U+2661 WHITE HEART SUIT. These escape sequences can be used for code points in the range from U+0000 to U+FFFF, i.e. the entire Basic Multilingual Plane.

But what about all the other planes — the astral planes? We need more than 4 hexadecimal digits to represent their code points… So how can we escape them?

In ECMAScript 6 this will be easy, since it introduces a new type of escape sequence: Unicode code point escapes. For example:

>> '\u{41}\u{42}\u{43}'

'ABC'

>> '\u{1F4A9}'

'💩' // U+1F4A9 PILE OF POO

Between the braces you can use up to six hexadecimal digits, which is enough to represent all Unicode code points. So, by using this type of escape sequence, you can easily escape any Unicode symbol based on its code point.

For backwards compatibility with ECMAScript 5 and older environments, the unfortunate solution is to use surrogate pairs:

>> '\uD83D\uDCA9'

'💩' // U+1F4A9 PILE OF POO

In that case, each escape represents the code point of a surrogate half. Two surrogate halves form a single astral symbol.

Note that the surrogate code points don’t look anything like the original code point. There are formulas to calculate the surrogates based on a given astral code point, and the other way around — to calculate the original astral code point based on its surrogate pair.
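For reference, the conversion formulas can be sketched as follows. This is the standard UTF-16 arithmetic; the function names toSurrogatePair and fromSurrogatePair are hypothetical helpers, not built-ins.

```javascript
// Hypothetical helpers illustrating the UTF-16 surrogate formulas.

// Astral code point -> [high surrogate, low surrogate]
function toSurrogatePair(codePoint) {
  if (codePoint < 0x10000 || codePoint > 0x10FFFF) {
    throw new RangeError('Not an astral code point: ' + codePoint);
  }
  const offset = codePoint - 0x10000;        // 20-bit value
  const high = 0xD800 + (offset >> 10);      // top 10 bits
  const low = 0xDC00 + (offset & 0x3FF);     // bottom 10 bits
  return [high, low];
}

// [high surrogate, low surrogate] -> astral code point
function fromSurrogatePair(high, low) {
  return (high - 0xD800) * 0x400 + (low - 0xDC00) + 0x10000;
}

const [high, low] = toSurrogatePair(0x1F4A9);
console.log(high.toString(16), low.toString(16)); // d83d dca9
console.log(fromSurrogatePair(0xD83D, 0xDCA9).toString(16)); // 1f4a9
```

The offset of 0x10000 is what makes the surrogates look so unlike the original code point: the pair encodes the code point minus 0x10000, split into two 10-bit halves.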

Using surrogate pairs, all astral code points (i.e. from U+010000 to U+10FFFF) can be represented… But the whole concept of using a single escape to represent BMP symbols, and two escapes for astral symbols, is confusing, and has lots of annoying consequences.

Counting symbols in a JavaScript string

Let’s say you want to count the number of symbols in a given string, for example. How would you go about it?

My first thought would probably be to simply use the length property.

>> 'A'.length // U+0041 LATIN CAPITAL LETTER A

1

>> 'A' == '\u0041'

true

>> 'B'.length // U+0042 LATIN CAPITAL LETTER B

1

>> 'B' == '\u0042'

true

In these examples, the length property of the string happens to reflect the number of characters. This makes sense: if we use escape sequences to represent the symbols, it’s obvious that we only need a single escape for each of these symbols. But this is not always the case! Here’s a slightly different example:

>> '𝐀'.length // U+1D400 MATHEMATICAL BOLD CAPITAL A

2

>> '𝐀' == '\uD835\uDC00'

true

>> '𝐁'.length // U+1D401 MATHEMATICAL BOLD CAPITAL B

2

>> '𝐁' == '\uD835\uDC01'

true

>> '💩'.length // U+1F4A9 PILE OF POO

2

>> '💩' == '\uD83D\uDCA9'

true

Internally, JavaScript represents astral symbols as surrogate pairs, and it exposes the separate surrogate halves as separate “characters”. If you represent the symbols using nothing but ECMAScript 5-compatible escape sequences, you’ll see that two escapes are needed for each astral symbol. This is confusing, because humans generally think in terms of Unicode symbols or graphemes instead.

Accounting for astral symbols

Getting back to the question: how to accurately count the number of symbols in a JavaScript string? The trick is to account for surrogate pairs properly, and only count each pair as a single symbol. You could use something like this:

var regexAstralSymbols = /[\uD800-\uDBFF][\uDC00-\uDFFF]/g;

function countSymbols(string) {
  return string
    // Replace every surrogate pair with a BMP symbol.
    .replace(regexAstralSymbols, '_')
    // …and *then* get the length.
    .length;
}

Or, if you use Punycode.js (which ships with Node.js), make use of its utility methods to convert between JavaScript strings and Unicode code points. The punycode.ucs2.decode method takes a string and returns an array of Unicode code points; one item for each symbol.

function countSymbols(string) {
  return punycode.ucs2.decode(string).length;
}

In ES6 you can do something similar with Array.from, which uses the string’s iterator to split it into an array of strings that each contain a single symbol:

function countSymbols(string) {
  return Array.from(string).length;
}

Or, using the spread operator ...:

function countSymbols(string) {
  return [...string].length;
}

Using any of those implementations, we’re now able to count code points properly, which leads to more accurate results:

>> countSymbols('A') // U+0041 LATIN CAPITAL LETTER A

1

>> countSymbols('𝐀') // U+1D400 MATHEMATICAL BOLD CAPITAL A

1

>> countSymbols('💩') // U+1F4A9 PILE OF POO

1

Accounting for lookalikes

But if we’re being really pedantic, counting the number of symbols in a string is even more complicated. Consider this example:

>> 'mañana' == 'mañana'

false

JavaScript is telling us that these strings are different, but visually, there’s no way to tell! So what’s going on there?

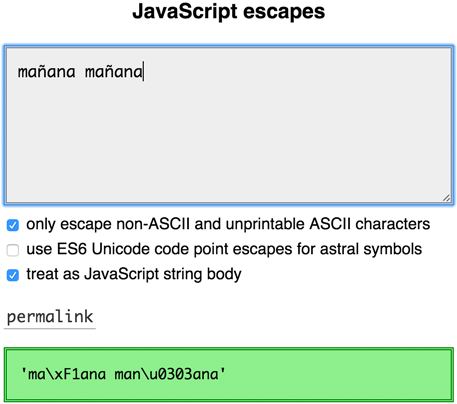

As my JavaScript escapes tool would tell you, the reason is the following:

>> 'ma\xF1ana' == 'man\u0303ana'

false

>> 'ma\xF1ana'.length

6

>> 'man\u0303ana'.length

7

The first string contains U+00F1 LATIN SMALL LETTER N WITH TILDE, while the second string uses two separate code points (U+006E LATIN SMALL LETTER N and U+0303 COMBINING TILDE) to create the same glyph. That explains why they’re not equal, and why they have a different length.

However, if we want to count the number of symbols in these strings the same way a human being would, we’d expect the answer 6 for both strings, since that’s the number of visually distinguishable glyphs in each string. How can we make this happen?

In ECMAScript 6, the solution is fairly simple:

function countSymbolsPedantically(string) {
  // Unicode Normalization, NFC form, to account for lookalikes:
  var normalized = string.normalize('NFC');
  // Account for astral symbols / surrogates, just like we did before:
  return punycode.ucs2.decode(normalized).length;
}

The normalize method on String.prototype performs Unicode normalization, which accounts for these differences. If there is a single code point that represents the same glyph as another code point followed by a combining mark, it will normalize it to the single code point form.

>> countSymbolsPedantically('mañana') // U+00F1

6

>> countSymbolsPedantically('mañana') // U+006E + U+0303

6

For backwards compatibility with ECMAScript 5 and older environments, a String.prototype.normalize polyfill can be used.
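Normalization is also what you want when comparing strings that may contain lookalikes. Here is a small sketch; equalsNormalized is a hypothetical helper name, and it assumes an environment with String.prototype.normalize (native or polyfilled).

```javascript
// Hypothetical helper: compare two strings after normalizing both to NFC.
function equalsNormalized(a, b) {
  return a.normalize('NFC') === b.normalize('NFC');
}

const precomposed = 'ma\xF1ana';    // U+00F1
const decomposed = 'man\u0303ana';  // U+006E + U+0303

console.log(precomposed === decomposed);                // false
console.log(equalsNormalized(precomposed, decomposed)); // true

// NFC composes, NFD decomposes:
console.log(decomposed.normalize('NFC').length);  // 6
console.log(precomposed.normalize('NFD').length); // 7
```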

Accounting for other combining marks

This still isn’t perfect, though — code points with multiple combining marks applied to them always result in a single visual glyph, but may not have a normalized form, in which case normalization doesn’t help. For example:

>> 'q\u0307\u0323'.normalize('NFC') // `q̣̇`

'q\u0307\u0323'

>> countSymbolsPedantically('q\u0307\u0323')

3 // not 1

>> countSymbolsPedantically('Z͑ͫ̓ͪ̂ͫ̽͏̴̙̤̞͉͚̯̞̠͍A̴̵̜̰͔ͫ͗͢L̠ͨͧͩ͘G̴̻͈͍͔̹̑͗̎̅͛́Ǫ̵̹̻̝̳͂̌̌͘!͖̬̰̙̗̿̋ͥͥ̂ͣ̐́́͜͞')

74 // not 6

If a more accurate solution is needed, you could instead use a regular expression to remove any combining marks from the input string.

// Note: replace the following regular expression with its transpiled equivalent
// to make it work in old environments. https://mths.be/bwm
var regexSymbolWithCombiningMarks = /(\P{Mark})(\p{Mark}+)/gu;

function countSymbolsIgnoringCombiningMarks(string) {
  // Remove any combining marks, leaving only the symbols they belong to:
  var stripped = string.replace(regexSymbolWithCombiningMarks, function($0, symbol, combiningMarks) {
    return symbol;
  });
  // Account for astral symbols / surrogates, just like we did before:
  return punycode.ucs2.decode(stripped).length;
}

This function removes any combining marks, leaving only the symbols they belong to. Any unmatched combining marks (at the start of the string) are left intact. Once the regular expression is transpiled as noted in the comment above, this solution works even in ECMAScript 3 environments, and it provides the most accurate results yet:

>> countSymbolsIgnoringCombiningMarks('q\u0307\u0323')

1

>> countSymbolsIgnoringCombiningMarks('Z͑ͫ̓ͪ̂ͫ̽͏̴̙̤̞͉͚̯̞̠͍A̴̵̜̰͔ͫ͗͢L̠ͨͧͩ͘G̴̻͈͍͔̹̑͗̎̅͛́Ǫ̵̹̻̝̳͂̌̌͘!͖̬̰̙̗̿̋ͥͥ̂ͣ̐́́͜͞')

6

Accounting for other types of grapheme clusters

The above algorithm is still an oversimplification — it fails for grapheme clusters such as நி (ந + ி), Hangul made of conjoining Jamo such as 깍 (ᄁ + ᅡ + ᆨ), emoji sequences such as 👨👩👧👦 (👨 + U+200D ZERO WIDTH JOINER + 👩 + U+200D ZERO WIDTH JOINER + 👧 + U+200D ZERO WIDTH JOINER + 👦), or other similar symbols.

Unicode Standard Annex #29 on Unicode Text Segmentation describes an algorithm for determining grapheme cluster boundaries. For a completely accurate solution that works for all Unicode scripts, implement this algorithm in JavaScript, and then count each grapheme cluster as a single symbol. There is a proposal to add Intl.Segmenter, a text segmentation API, to ECMAScript.
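In environments where Intl.Segmenter is available, counting grapheme clusters could look something like the sketch below. The function name countGraphemes is my own, and the exact results depend on the Unicode data that ships with the engine, so treat this as an illustration rather than a guarantee.

```javascript
// A minimal sketch, assuming the environment provides Intl.Segmenter.
function countGraphemes(string) {
  const segmenter = new Intl.Segmenter(undefined, { granularity: 'grapheme' });
  let count = 0;
  // Each iteration yields one grapheme cluster as defined by UAX #29.
  for (const segment of segmenter.segment(string)) {
    count++;
  }
  return count;
}

console.log(countGraphemes('man\u0303ana'));       // combining tilde
console.log(countGraphemes('q\u0307\u0323'));      // multiple combining marks
console.log(countGraphemes('\u1101\u1161\u11A8')); // conjoining Hangul Jamo
```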

Reversing strings in JavaScript

Here’s an example of a similar problem: reversing a string in JavaScript. How hard can it be, right? A common, very simple, solution to this problem is the following:

// naive solution

function reverse(string) {
  return string.split('').reverse().join('');
}

It seems to work fine in a lot of cases:

>> reverse('abc')

'cba'

>> reverse('mañana') // U+00F1

'anañam'

However, it completely messes up strings that contain combining marks or astral symbols.

>> reverse('mañana') // U+006E + U+0303

'anãnam' // note: the `~` is now applied to the `a` instead of the `n`

>> reverse('💩') // U+1F4A9

'��' // `'\uDCA9\uD83D'`, the surrogate pair for `💩` in the wrong order

To reverse astral symbols correctly in ES6, the string iterator can be used in combination with Array.from:

// slightly better solution that relies on ES6 StringIterator and `Array.from`

function reverse(string) {
  return Array.from(string).reverse().join('');
}

That still doesn’t solve the issues involving combining marks, though.

Luckily, a brilliant computer scientist named Missy Elliott came up with a bulletproof algorithm that accounts for these issues. It goes:

I put my thang down, flip it, and reverse it. I put my thang down, flip it, and reverse it.

And indeed: by swapping the position of any combining marks with the symbol they belong to, as well as reversing any surrogate pairs before further processing the string, the issues are avoided successfully. Thanks, Missy!
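Here is a rough sketch of that swap-then-reverse idea. It is my own simplified illustration rather than the source of any particular library; the name reverseSymbols is hypothetical, and it assumes an engine that supports the u flag and Unicode property escapes (\p{M} matches any combining mark).

```javascript
// A symbol followed by one or more combining marks.
const regexSymbolWithMarks = /(\P{M})(\p{M}+)/gu;
// A high surrogate followed by a low surrogate.
const regexSurrogatePair = /([\uD800-\uDBFF])([\uDC00-\uDFFF])/g;

function reverseSymbols(string) {
  // Step 1: "put it down and flip it".
  const prepared = string
    // Move the combining marks in front of their base symbol. The marks are
    // reversed too, so that they end up in their original order again.
    .replace(regexSymbolWithMarks, (match, base, marks) => reverseSymbols(marks) + base)
    // Swap the halves of every surrogate pair, so the low surrogate goes first.
    .replace(regexSurrogatePair, '$2$1');

  // Step 2: "reverse it", one code unit at a time.
  let result = '';
  for (let index = prepared.length - 1; index >= 0; index--) {
    result += prepared.charAt(index);
  }
  return result;
}

console.log(reverseSymbols('man\u0303ana') === 'anan\u0303am'); // true
console.log(reverseSymbols('\u{1F4A9}') === '\u{1F4A9}');       // true
```

Note that this handles combining marks and surrogate pairs only; other grapheme clusters (such as emoji joined by U+200D) would still be split.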

// using Esrever (https://mths.be/esrever)

>> esrever.reverse('mañana') // U+006E + U+0303

'anañam'

>> esrever.reverse('💩') // U+1F4A9

'💩' // U+1F4A9

Issues with Unicode in string methods

This behavior affects other string methods, too.

Turning a code point into a symbol

String.fromCharCode allows you to create a string based on a Unicode code point. But it only works correctly for code points in the BMP range (i.e. from U+0000 to U+FFFF). If you use it with an astral code point, you’ll get an unexpected result.

>> String.fromCharCode(0x0041) // U+0041

'A' // U+0041

>> String.fromCharCode(0x1F4A9) // U+1F4A9

'\uF4A9' // U+F4A9, not U+1F4A9

The only workaround is to calculate the code points for the surrogate halves yourself, and pass them as separate arguments.

>> String.fromCharCode(0xD83D, 0xDCA9)

'💩' // U+1F4A9

If you don’t want to go through the trouble of calculating the surrogate halves, you could resort to Punycode.js’s utility methods once again:

>> punycode.ucs2.encode([ 0x1F4A9 ])

'💩' // U+1F4A9

Luckily, ECMAScript 6 introduces String.fromCodePoint(codePoint) which does handle astral symbols correctly. It can be used for any Unicode code point, i.e. from U+000000 to U+10FFFF.

>> String.fromCodePoint(0x1F4A9)

'💩' // U+1F4A9

For backwards compatibility with ECMAScript 5 and older environments, use a String.fromCodePoint() polyfill.

Getting a symbol out of a string

If you use String.prototype.charAt(position) to retrieve the first symbol in the string containing the pile of poo character, you’ll only get the first surrogate half instead of the whole symbol.

>> '💩'.charAt(0) // U+1F4A9

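'\uD83D' // U+D83D, i.e. the first surrogate half for U+1F4A9

There was a proposal to introduce String.prototype.at(position) in ECMAScript 7. It would be like charAt, except that it deals with full symbols instead of surrogate halves whenever possible. (The String.prototype.at method that was eventually standardized in ES2022 indexes code units, just like charAt, so the output below reflects the proposal rather than the standardized method.)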

>> '💩'.at(0) // U+1F4A9

'💩' // U+1F4A9

For backwards compatibility with ECMAScript 5 and older environments, a String.prototype.at() polyfill/prollyfill is available.

Getting a code point out of a string

Similarly, if you use String.prototype.charCodeAt(position) to retrieve the code point of the first symbol in the string, you’ll get the code point of the first surrogate half instead of the code point of the pile of poo character.

>> '💩'.charCodeAt(0)

0xD83D

Luckily, ECMAScript 6 introduces String.prototype.codePointAt(position), which is like charCodeAt except it deals with full symbols instead of surrogate halves whenever possible.

>> '💩'.codePointAt(0)

0x1F4A9

For backwards compatibility with ECMAScript 5 and older environments, use a String.prototype.codePointAt() polyfill.
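One thing to watch out for: the position argument of codePointAt is still a code unit index, so pointing it at the second half of a surrogate pair gives you the lone low surrogate. To get the code points of all whole symbols, it is easier to let the string iterator do the splitting. A small sketch (getCodePoints is a hypothetical helper name):

```javascript
// Hypothetical helper: one code point per whole symbol in the string.
function getCodePoints(string) {
  // Array.from uses the string iterator, which yields whole symbols;
  // each symbol is then mapped to its code point.
  return Array.from(string, (symbol) => symbol.codePointAt(0));
}

console.log(getCodePoints('a\u{1F4A9}')); // [ 97, 128169 ], i.e. 0x61 and 0x1F4A9

// Position 1 is in the middle of the surrogate pair:
console.log('\u{1F4A9}'.codePointAt(1).toString(16)); // dca9
```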

Iterating over all symbols in a string

Let’s say you want to loop over every symbol in a string and do something with each separate symbol.

In ECMAScript 5 you’d have to write a lot of boilerplate code just to account for surrogate pairs:

function getSymbols(string) {
  var index = 0;
  var length = string.length;
  var output = [];
  for (; index < length; ++index) {
    var charCode = string.charCodeAt(index);
    if (charCode >= 0xD800 && charCode <= 0xDBFF) {
      charCode = string.charCodeAt(index + 1);
      if (charCode >= 0xDC00 && charCode <= 0xDFFF) {
        output.push(string.slice(index, index + 2));
        ++index;
        continue;
      }
    }
    output.push(string.charAt(index));
  }
  return output;
}

var symbols = getSymbols('💩');
symbols.forEach(function(symbol) {
  assert(symbol == '💩');
});

Alternatively, you could use a regular expression like var regexCodePoint = /[^\uD800-\uDFFF]|[\uD800-\uDBFF][\uDC00-\uDFFF]|[\uD800-\uDFFF]/g; and iterate over the matches.
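A sketch of that regular expression approach, which works in ECMAScript 5 as well if you swap const for var. The function name getSymbolsWithRegex is hypothetical; the pattern is the one mentioned above.

```javascript
// Matches, in order of preference: a non-surrogate code unit, a complete
// surrogate pair, or a lone surrogate.
const regexCodePoint = /[^\uD800-\uDFFF]|[\uD800-\uDBFF][\uDC00-\uDFFF]|[\uD800-\uDFFF]/g;

function getSymbolsWithRegex(string) {
  // String.prototype.match returns null when there are no matches.
  return string.match(regexCodePoint) || [];
}

getSymbolsWithRegex('a\uD83D\uDCA9b').forEach(function(symbol) {
  console.log(symbol, symbol.length);
});
```

Because every alternative consumes at least one code unit and together they cover all possible code units, the matches always add up to the complete input string.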

In ECMAScript 6, you can simply use for…of. The string iterator deals with whole symbols instead of surrogate pairs.

for (const symbol of '💩') {
  assert(symbol == '💩');
}

Unfortunately there’s no way to polyfill this, as for…of is a grammar-level construct.

Other issues

This behavior affects pretty much all string methods, including those that weren’t explicitly mentioned here (such as String.prototype.substring, String.prototype.slice, etc.) so be careful when using them.
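As an illustration, here is what goes wrong with slice, along with one possible workaround. sliceSymbols is a hypothetical helper, not a built-in; it trades some performance for correctness by splitting the string into whole symbols first, and it counts code points, not grapheme clusters.

```javascript
// Hypothetical helper: like String.prototype.slice, but start and end
// count whole symbols (code points) instead of code units.
function sliceSymbols(string, start, end) {
  return Array.from(string).slice(start, end).join('');
}

const text = '\u{1F4A9}abc';

// The built-in slice cuts the surrogate pair in half:
console.log(text.slice(0, 1) === '\uD83D');           // true

// The symbol-aware version keeps it intact:
console.log(sliceSymbols(text, 0, 1) === '\u{1F4A9}'); // true
console.log(sliceSymbols(text, 1));                    // 'abc'
```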

Issues with Unicode in regular expressions

Matching code points and Unicode scalar values

The dot operator (.) in regular expressions only matches a single “character”… But since JavaScript exposes surrogate halves as separate “characters”, it won’t ever match an astral symbol.

>> /foo.bar/.test('foo💩bar')

false

Let’s think about this for a second… What regular expression could we use to match any Unicode symbol? Any ideas? As demonstrated, . is not sufficient, because it doesn’t match line breaks or whole astral symbols.

>> /^.$/.test('💩')

false

To match line breaks correctly, we could use [\s\S] instead, but that still won’t match whole astral symbols.

>> /^[\s\S]$/.test('💩')

false

As it turns out, the regular expression to match any Unicode code point is not very straightforward at all:

>> /[\0-\uD7FF\uE000-\uFFFF]|[\uD800-\uDBFF][\uDC00-\uDFFF]|[\uD800-\uDBFF](?![\uDC00-\uDFFF])|(?:[^\uD800-\uDBFF]|^)[\uDC00-\uDFFF]/.test('💩') // wtf

true

Of course, you wouldn’t want to write these regular expressions by hand, let alone debug them. To generate the previous regex, I’ve used Regenerate, a library to easily create regular expressions based on a list of code points or symbols:

>> regenerate().addRange(0x0, 0x10FFFF).toString()

'[\0-\uD7FF\uE000-\uFFFF]|[\uD800-\uDBFF][\uDC00-\uDFFF]|[\uD800-\uDBFF](?![\uDC00-\uDFFF])|(?:[^\uD800-\uDBFF]|^)[\uDC00-\uDFFF]'

From left to right, this regex matches BMP symbols, or surrogate pairs (astral symbols), or lone surrogates.

While lone surrogates are technically allowed in JavaScript strings, they don’t map to any symbols by themselves, and should be avoided. The term Unicode scalar values refers to all code points except for surrogate code points. Here’s how to create a regular expression that matches any Unicode scalar value:

>> regenerate()
     .addRange(0x0, 0x10FFFF)     // all Unicode code points
     .removeRange(0xD800, 0xDBFF) // minus high surrogates
     .removeRange(0xDC00, 0xDFFF) // minus low surrogates
     .toRegExp()

/[\0-\uD7FF\uE000-\uFFFF]|[\uD800-\uDBFF][\uDC00-\uDFFF]/

Regenerate is meant to be used as part of a build script, to create complex regular expressions while still keeping the script that generates them very readable and easy to maintain.
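The lone surrogate part of the earlier “any code point” pattern is also useful on its own, for checking whether a string consists of nothing but Unicode scalar values. A minimal sketch; the helper name containsOnlyScalarValues is my own.

```javascript
// Matches a high surrogate that is not followed by a low surrogate, or a low
// surrogate that is not preceded by a high surrogate.
const regexLoneSurrogate = /[\uD800-\uDBFF](?![\uDC00-\uDFFF])|(?:[^\uD800-\uDBFF]|^)[\uDC00-\uDFFF]/;

// Hypothetical helper: true if the string contains no lone surrogates.
function containsOnlyScalarValues(string) {
  return !regexLoneSurrogate.test(string);
}

console.log(containsOnlyScalarValues('foo\uD83D\uDCA9bar')); // true
console.log(containsOnlyScalarValues('foo\uD83Dbar'));       // false
console.log(containsOnlyScalarValues('\uDCA9'));             // false
```

The regular expression deliberately has no g flag, so repeated calls to test don’t carry over any lastIndex state.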

ECMAScript 6 introduces a u flag for regular expressions that causes the . operator to match whole code points instead of surrogate halves.

>> /foo.bar/.test('foo💩bar')

false

>> /foo.bar/u.test('foo💩bar')

true

Note that . still won’t match line breaks, though. When the u flag is set, . is equivalent to the following backwards-compatible regular expression pattern:

>> regenerate()
     .addRange(0x0, 0x10FFFF) // all Unicode code points
     .remove( // minus `LineTerminator`s (https://ecma-international.org/ecma-262/5.1/#sec-7.3):
       0x000A, // Line Feed <LF>
       0x000D, // Carriage Return <CR>
       0x2028, // Line Separator <LS>
       0x2029  // Paragraph Separator <PS>
     )
     .toString();

'[\0-\t\x0B\f\x0E-\u2027\u202A-\uD7FF\uE000-\uFFFF]|[\uD800-\uDBFF][\uDC00-\uDFFF]|[\uD800-\uDBFF](?![\uDC00-\uDFFF])|(?:[^\uD800-\uDBFF]|^)[\uDC00-\uDFFF]'

>> /foo(?:[\0-\t\x0B\f\x0E-\u2027\u202A-\uD7FF\uE000-\uFFFF]|[\uD800-\uDBFF][\uDC00-\uDFFF]|[\uD800-\uDBFF](?![\uDC00-\uDFFF])|(?:[^\uD800-\uDBFF]|^)[\uDC00-\uDFFF])bar/.test('foo💩bar')

true

Astral ranges in character classes

Considering that /[a-c]/ matches any symbol from U+0061 LATIN SMALL LETTER A to U+0063 LATIN SMALL LETTER C, it might seem like /[💩-💫]/ would match any symbol from U+1F4A9 PILE OF POO to U+1F4AB DIZZY SYMBOL. This is however not the case:

>> /[💩-💫]/

SyntaxError: Invalid regular expression: Range out of order in character class

This happens because that regular expression is equivalent to:

>> /[\uD83D\uDCA9-\uD83D\uDCAB]/

SyntaxError: Invalid regular expression: Range out of order in character class

Instead of matching U+1F4A9, U+1F4AA, and U+1F4AB like we wanted to, the regex matches:

- U+D83D (a high surrogate), or…

- the range from U+DCA9 to U+D83D (which is invalid, because the starting code point is greater than the code point marking the end of the range), or…

- U+DCAB (a low surrogate).
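You can verify this by looking at the code units involved. In the sketch below, getCodeUnits is a hypothetical helper; the comparison at the end is the check that fails when the character class is parsed without the u flag.

```javascript
// Hypothetical helper: list the UTF-16 code units of a string.
function getCodeUnits(string) {
  const units = [];
  for (let index = 0; index < string.length; index++) {
    units.push(string.charCodeAt(index));
  }
  return units;
}

const [startHigh, startLow] = getCodeUnits('\u{1F4A9}'); // 0xD83D, 0xDCA9
const [endHigh, endLow] = getCodeUnits('\u{1F4AB}');     // 0xD83D, 0xDCAB

// Without the u flag, the class is read as: startHigh, (startLow - endHigh), endLow.
// The range in the middle starts at a higher code unit than it ends with:
const rangeIsOutOfOrder = startLow > endHigh;
console.log(startLow.toString(16), '>', endHigh.toString(16), rangeIsOutOfOrder);
```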

ECMAScript 6 allows you to opt in to the more sensical behavior by — once again — using the magical /u flag.

>> /[\uD83D\uDCA9-\uD83D\uDCAB]/u.test('\uD83D\uDCA9') // match U+1F4A9

true

>> /[\u{1F4A9}-\u{1F4AB}]/u.test('\u{1F4A9}') // match U+1F4A9

true

>> /[💩-💫]/u.test('💩') // match U+1F4A9

true

>> /[\uD83D\uDCA9-\uD83D\uDCAB]/u.test('\uD83D\uDCAA') // match U+1F4AA

true

>> /[\u{1F4A9}-\u{1F4AB}]/u.test('\u{1F4AA}') // match U+1F4AA

true

>> /[💩-💫]/u.test('💪') // match U+1F4AA

true

>> /[\uD83D\uDCA9-\uD83D\uDCAB]/u.test('\uD83D\uDCAB') // match U+1F4AB

true

>> /[\u{1F4A9}-\u{1F4AB}]/u.test('\u{1F4AB}') // match U+1F4AB

true

>> /[💩-💫]/u.test('💫') // match U+1F4AB

true

Sadly, this solution isn’t backwards compatible with ECMAScript 5 and older environments. If that is a concern, you should use Regenerate to generate ES5-compatible regular expressions that deal with astral ranges, or astral symbols in general:

>> regenerate().addRange('💩', '💫').toString()

'\uD83D[\uDCA9-\uDCAB]'

>> /^\uD83D[\uDCA9-\uDCAB]$/.test('💩') // match U+1F4A9

true

>> /^\uD83D[\uDCA9-\uDCAB]$/.test('💪') // match U+1F4AA

true

>> /^\uD83D[\uDCA9-\uDCAB]$/.test('💫') // match U+1F4AB

true

Update: Another option is to transpile your code using regexpu or a transpiler that includes regexpu. I wrote a separate blog post with more details on Unicode-aware regular expressions in ES6.

Real-world bugs and how to avoid them

This behavior leads to many issues. Twitter, for example, allows 140 characters per tweet, and their back-end doesn’t mind what kind of symbol it is — astral or not. But because the JavaScript counter on their website at some point simply read out the string’s length without accounting for surrogate pairs, it wasn’t possible to enter more than 70 astral symbols. (The bug has since been fixed.)

Many JavaScript libraries that deal with strings fail to account for astral symbols properly.

For example, when Countable.js was released, it didn’t count astral symbols correctly.

Underscore.string has an implementation of reverse that doesn’t handle combining marks or astral symbols. (Use Missy Elliott’s algorithm instead.)

It also incorrectly decodes HTML numeric entities for astral symbols, such as &#x1F4A9; (💩). Lots of other HTML entity conversion libraries have similar problems. (Until these bugs are fixed, consider using he instead for all your HTML-encoding/decoding needs.)

These are all easy mistakes to make — after all, the way JavaScript handles Unicode is just plain annoying. This write-up already demonstrated how these bugs can be fixed; but how can we prevent them?

Introducing… The Pile of Poo Test™

Whenever you’re working on a piece of JavaScript code that deals with strings or regular expressions in some way, just add a unit test that contains a pile of poo (💩) in a string, and see if anything breaks. It’s a quick, fun, and easy way to see if your code supports astral symbols. Once you’ve found a Unicode-related bug in your code, all you need to do is apply the techniques discussed in this post to fix it.

A good test string for Unicode support in general is the following: Iñtërnâtiônàlizætiøn☃💩. Its first 20 symbols are in the range from U+0000 to U+00FF, then there’s a symbol in the range from U+0100 to U+FFFF, and finally there’s an astral symbol (from the range of U+010000 to U+10FFFF).
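Such a test could look something like the sketch below. The countSymbols function here is just a stand-in for whatever code you want to test, and runPileOfPooTest is a hypothetical name; in a real project you would use your test framework’s assertions instead.

```javascript
// Stand-in for the code under test (hypothetical).
function countSymbols(string) {
  return Array.from(string).length;
}

// 'Iñtërnâtiônàlizætiøn☃💩', written with escapes so the source file's
// encoding can't get in the way.
const testString = 'I\xF1t\xEBrn\xE2ti\xF4n\xE0liz\xE6ti\xF8n\u2603\uD83D\uDCA9';

function runPileOfPooTest() {
  const failures = [];
  if (countSymbols('\uD83D\uDCA9') !== 1) {
    failures.push('pile of poo should count as 1 symbol');
  }
  // 20 Latin-1 symbols + the snowman + the pile of poo
  if (countSymbols(testString) !== 22) {
    failures.push('test string should count as 22 symbols');
  }
  return failures;
}

const failures = runPileOfPooTest();
console.log(failures.length === 0 ? 'All good!' : failures.join('\n'));
```

If you replace the stand-in with a naive implementation that returns string.length, both checks fail, which is exactly the point of the test.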

TL;DR Go forth and submit pull requests with piles of poo in them. It’s the only way to Unicode the Web Forward®.

Slides

This write-up summarizes the various presentations I’ve given on the subject of Unicode in JavaScript over the past few years. The slides I used for those talks are embedded below.

Want me to give this presentation at your meetup/conference? Let’s talk.

Translations

- Korean: 자바스크립트의 유니코드 문제 by Eugene Byun

Comments

Erik Arvidsson wrote on :

Great post.

Unfortunately the u flag for regexps is probably not making it into ES6. The spec text is missing and there is no champion.

This might not be the right medium to ask but will you be the champion for this?

Mathias wrote on :

Erik:

Sounds like fun! What exactly does this entail, though? I’d definitely love to help out where I can. (Sent you an email.)

Niloy Mondal wrote on :

Why is ES6 introducing fromCodePoint? Can’t we simply fix the code in fromCharCode?

Mathias wrote on :

Niloy: Unfortunately, changing the behavior of all the existing string methods and properties would break backwards compatibility. Code that relies on the current behavior would break. Adding new methods and properties is the only option.

Joshua Tenner wrote on :

Mathias: I understand the reason for this, but it’s the same logic as: “My code is a pile of poo, instead of fixing it, I’m going to make more potential piles of poo, but it’s good piles of poo for now.”

Sarcasm aside, great article!

Tab Atkins Jr. wrote on :

Joshua: Not quite. It’s not your code you’re worried about, it’s all the other millions of independent pieces of code relying on your code. We can’t fix all of them, so we have to leave the bad code in and just offer a migration path to something better.

Josef wrote on :

Couldn’t the behavior be implemented with something like Strict Mode?

Mathias wrote on :

Josef: No, as existing strict mode code relies on the behavior as well. You’d have to introduce yet another mode, but that complicates the language even more, and there is no point anyway since you could just add other methods and properties instead.

Anup wrote on :

Thanks for this article. We discovered some of these issues a few weeks back when looking into what issues our app might have supporting Thai and weren’t sure how to proceed.

This article may give us a few useful things to pursue now. (We have to also ensure if we make changes to our JavaScript layer that our server layer supports such changes in a consistent way — e.g., simple-sounding string length validation we have in both layers…)

Thanks!

Nicolas Joyard wrote on :

Great article, thanks.

Of course fixing existing methods is not an option, but they could at least be changed to log warnings in browser consoles.

Marijn wrote on :

Your combining marks regexp seems to miss most extending characters. Here’s the one CodeMirror uses: https://github.com/marijnh/CodeMirror/blob/b78c9d04fc8b3/lib/codemirror.js#L6780 (which I generated from the Unicode database, including all code points marked Gr_Ext).

Neil Rashbrook wrote on :

Those regexps seem unnecessarily complicated. Take these examples:

I would write them as:

Nux wrote on :

Hi. Just to let you know — Opera 12 works fine with combining marks (the ones from the section ‘Accounting for other combining marks’).

Also note that the real horror story is almost any other language. For example PHP handles Unicode horribly. You need a MultiByte library even for UTF-8, and it’s not available by default. That’s why UTF-16 doesn’t sound that much of a problem especially since most Unicode characters are not rendered even by the top browsers.

C. Scott Ananian wrote on :

You say “there’s no way to polyfill this” when discussing StringIterator and for…of, but in fact es6-shim provides an effective polyfill by allowing you to access the StringIterator via Array.from. That is, once you load es6-shim you can iterate over the results of Array.from(string).

Mathias wrote on :

Scott: Sure, iterators can be implemented in ES5, but not the for…of syntax. As I said in the text:

That said, what es6-shim is doing is really awesome. Keep up the nice work!

T1st3 wrote on :

Hopefully, JavaScript has no unicorn problem!

(worst comment ever)

Donald wrote on :

I’d like to save your presentation on my computer. Can I? I don’t see a function to do it.

GottZ wrote on :

Neil Rashbrook: depends. if you want to match a specified number of chars, your short one would fail at astral symbols because it matches as two. if it does not matter how many chars you want to match you can just use . instead of your short one

Fabio wrote on :

I noticed in Firefox 32, the combining version of mañana incorrectly displays the tilde over the second a in the text above (but correctly over the n when pasted here...)

Cees Timmerman wrote on :

Fabio: Reported; thanks.

Orlin Georgiev wrote on :

Thanks for the awesome article! Here is an implementation of UAX-29 that properly counts and splits graphemes, without any “over-simplifications”: https://github.com/orling/grapheme-splitter You can include it in your article as someone may need it. I needed it, and since I found no publicly available JS implementation, had to roll this one on my own. No point in others reinventing the wheel, though!

Blizzardengle wrote on :

@Mathias: on your Git repo, would it be possible to point out you need to hex-escape your strings before encoding as well as use an eval on decoding? It took me a while to pick apart your demo page and figure that out.

Orlin Georgiev: thanks for that comment and link. That was one route I was researching for a project that wasn’t covered in this article.

Erik Evrard wrote on :

Thanks for this excellent post. I learned a lot, and you actually solved a problem that has been bothering me for a while.

I wanted to parse an HTML file that contains an e with a diaeresis, and I couldn’t get a match with a JS regular expression. It turned out it was not \u00CB (2 bytes), but instead e\u0308 (e with a combined diaeresis) (3 bytes). I had noticed that it matched 3 bytes instead of 2 (by using /.{3}/) but I couldn’t understand why. Your mañana example provided me with the hint that I needed!

Keep up the good work, your blog is outstanding.

Erik / Ghent, Belgium

Flimm wrote on :

Great post! I would ask that you mention which REPL you’re using before showing your first REPL example. If you’re using Node’s REPL in the terminal, you could have all sorts of weird issues if your terminal isn’t set up correctly for non-ASCII characters. Also, I’d include these examples in the counting symbols section for completeness:

mirabilos wrote on :

No, U+nnnn has exactly four digits. Your PILE OF POO must be written as U-0001F4A9 instead (exactly eight digits); cf. https://twitter.com/mirabilos/status/657549753140514816.

Mathias wrote on :

mirabilos: Only according to version 3 of the Unicode standard, which dates back to 2000. Nowadays even the Unicode standard itself uses the U+nnnn notation for any code point.

Anthony Rutledge wrote on :

Thank you for your great article on JavaScript’s Unicode problem. I do not have time to read all of it right now, but I will. I thoroughly enjoyed the slide show. Well done.

Edward J wrote on :

Thanks for your great detailed yet understandable explanation describing the JavaScript Unicode issue and ways to solve it. The code from your useful page allowed me to solved a problem with lengths of strings containing Unicode astral characters. By the way, it was good enough to be the first Google search result for my query “how to handle two code point unicode character string length in javascript?”.

Haroen Viaene wrote on :

Turning a string into an array already helps with dealing with multibyte characters, like pile of poo, but then we have characters like “woman with skin color running” (🏃🏽♀️). This symbol has a length of 7, spread out over 5 code points:

Now I’d like to have an implementation that keeps this character together. Here we have the skin color modifier that is causing complexity, the ZWJ before the female sign and the VS-15 to show it as an icon.

Thanks if you could help here ☺️

Mathias wrote on :

Haroen: Sounds like you’re looking for text segmentation.

YingshanDeng wrote on :

Hello 😀 In this article, I found several ways to count emojis in a string. And it works for almost all emoji, but I found some emoji for which it doesn’t work. For example: ❤️ 2️⃣

How can this be solved?

Mathias wrote on :

YingshanDeng: That’s the same question ☺️ You’re looking for text segmentation.

Caleb wrote on :

If I have '💩'.codePointAt(0).toString(16), that outputs a valid code, but if I do '💩'.codePointAt(1).toString(16) that still outputs the second surrogate character. Am I doing this correctly?

Mathias wrote on :

Caleb: To get the code point for each “whole” symbol in the string, iterate over each code point in the string and call .codePointAt(0) on each individual symbol.